How to use Amazon Prime Video’s new ‘Dialogue Boost’ feature

One of the most annoying things about watching TV is being able to hear the explosions and background music in a scene but not the speech. Dialogue Boost, please.

So that viewers are not forced to turn on subtitles when they don’t want them, Amazon Prime Video is introducing a new accessibility tool that lets users raise the volume of dialogue without also raising the background music and sound effects.

Raf Soltanovich, vice president of technology at Prime Video and Amazon Studios, said in a statement, “At Prime Video, we are committed to delivering an inclusive, egalitarian, and pleasant streaming experience for all our consumers.”


“Our library of captioned and audio-described content keeps expanding, and by using our technological capabilities to develop industry-first innovations like Dialogue Boost, we are taking another step towards a more accessible streaming experience,” Soltanovich added.

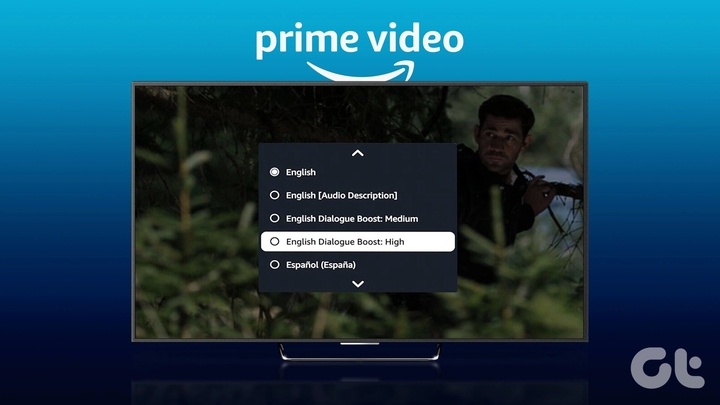

To use the feature, choose a movie or series that supports it; you can check the title’s detail page to see whether Dialogue Boost is available. Start playback and open the audio and subtitles drop-down menu on your screen. From the available audio options, select the Dialogue Boost level you want.

Depending on how much you want to strengthen the dialogue, you can pick a track such as “English Dialogue Boost: Medium” or “English Dialogue Boost: High”. The Dialogue Boost tracks come in Low, Medium, and High levels.

As their names suggest, each level applies a different amount of amplification, so try them all to find the one that works best for you. Then enjoy the title with louder, clearer dialogue.


To separate the dialogue from the background noise in a movie or TV show, Dialogue Boost looks for moments where the speech is being overpowered by other sounds. It then uses AI to enhance the dialogue in those moments.

In this way, Dialogue Boost does not amplify all of the dialogue, only the portions that need it. Before it is rolled out more broadly, the feature will first be available globally on a select group of Amazon Original programs, including “Tom Clancy’s Jack Ryan,” “The Marvelous Mrs. Maisel,” and others.

Dialogue Boost is accessible on all platforms that Prime Video supports. Amazon is the first global streaming service to offer this capability, although other platforms, including Roku, offer a similar feature called “Speech Clarity.”

I am a law graduate from NLU Lucknow. I have a flair for creative writing and work as a freelance content writer in my free time.